turbopuffer

cache money

For new folk here, hi, I’m Alex Mackenzie, a partner at Tapestry. I’d love to hear from any of you reading along: alex@tapestry.vc

A special thanks to Simon and the cool folk at turbopuffer for the platform access & for helping me write this. A fun primer to end 2025 on.

Last year, a well-known (how mysterious) database founder & I were debating investing in another database. Being quite a rare opportunity to ask someone of their ilk, “what do you look for when investing in databases?”, I took my shot. Cache hit.

Perhaps it’ll seem trite to some of you, but what he said has shaped several of my subsequent investments (e.g. Tracebit, Flox, Atlas). He said: “look for companies that ‘religions’ can be built around”.

I.e. look for companies that stand for something; what they stand for should probably p*ss a few people off. Why? Well, as I stated on Shomik’s podcast (hey Shomik) a year or so ago, “as degree of opinion goes up, CAC goes down.” People talk about you because they love ya, or talk about you because they hate ya. Par example, Flox is built on the “philosophy” that is Nix:

Currently in the data realm there are two “religions” that I’m tracking. Skip (portfolio) is a framework for creating reactive software, which responds in real-time to changing inputs. One way to think about this is “streaming, without the streams”. You can only imagine how Kafka zealots respond to such a claim! A primer on Skip will come soon.

The other religion forming is, as my friend Justin recently put it, “object storage is all you need”. You should watch his full presentation, but the tl;dr is that object (or, “blob”) storage (think S3) is having another “moment”, whereby open table formats (e.g. Iceberg), the proliferation of SSDs (vs. HDDs), and compatibility with modern query engines have bestowed blob storage’s 99.999999999% durability with a little more.. pace. Nice.

But how does one spot a religion? & better yet, how does one spot “companies that ‘religions’ can be built around”? In dev tools, infra, cyber, etc., my general learning is that everything washes out in developer docs. Jump into an architecture diagram & you’ll see if a company really stands for something. Seek out their explicit trade-offs across speed, cost, usability, etc. Don’t bother looking at landing pages.

So, last week, I decided to do some.. washing. I jumped into turbopuffer’s docs and it became clear that they’re a disciple of “only needing” object storage. Or, as Matt Klein put it, they’re leveraging the “blob store first architecture”. With customers like Notion, Suno, Cursor (primer here), Readwise, etc., it seems like this movement has legs. Let’s dig into “tpuf” under the hood and see what we think for ourselves? <(°O°)>

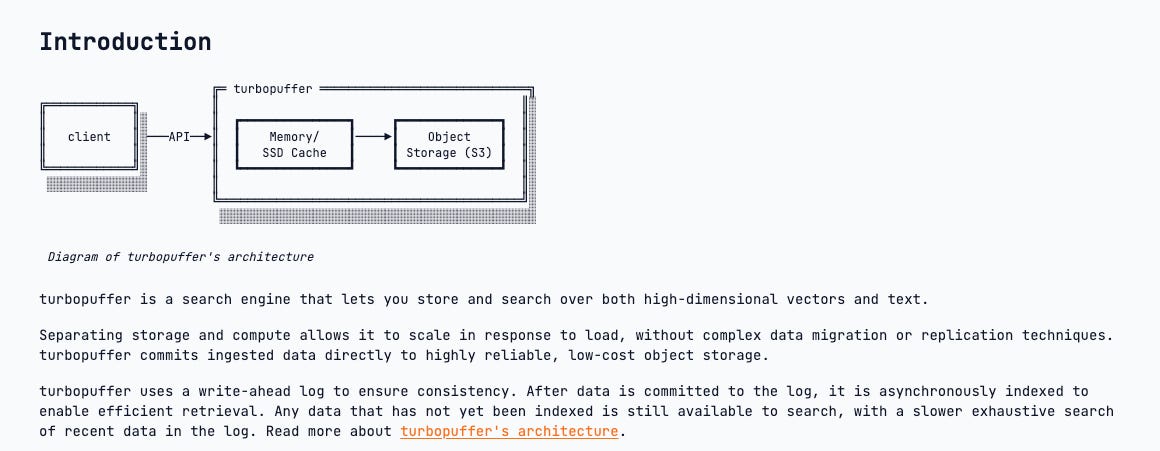

Ok, as always, let’s begin by seeing how turbopuffer describes itself. There’s quite a bit of detail in this description but, don’t worry, we’ll break it down piece-by-piece:

Cool site, huh. Let’s tackle object storage first. But we’re going to start counter-intuitively, and briefly touch upon storage hardware (e.g. SSDs).

Why? Well, there are layers-upon-layers of abstractions in data management that can make things quite complex. However, knowing that data is generally stored the same way on a given piece of hardware (irrespective of a high-level abstractions like object storage) gives us a good base to return to.

So, how is data ultimately stored? Like most things in infra: it depends. SSDs (solid state drives) encode and store data as electrical charges (watch this); HDDs (hard disk drives) use magnetic “domains” to store bits on spinning platters (watch this).

Ok, back to object storage. There are three primary (aka “the big three”) data storage abstractions or “models”: 1) file storage, 2) block storage, and 3), object storage. Think of these models as software “layers” that enable you (or your software) to reason about, and interface with, data physically contained within storage hardware.

We’re all familiar with file storage (even if you don’t know it yet). In file storage, we create folders (e.g. “why_now_primers”), and within these folders (or “directories”) files are stored that contain data (e.g. “subscribers.csv”):

File Storage System

└── why_now_primers/ # Folder (Directory)

├── subscribers.csv # File

│ ├── Name, Email, Subscription Date

│ ├── Warren Buffett, wb@berkshirehathaway.com, 2023-01-01

│ └── Howard Marks, hm@oaktreecapital.com, 2023-02-01

└── readme.txt # Another FileThanks, WB. If you’re a Mac user you use a specific file system implementation known as APFS, or, “Apple File System” (how, err.. creative). Ok, we understand how this software models our data, but let’s walkthrough how it interfaces with it.

Let’s say we want to open that “readme.txt” file up above. When we do so in an app like TextEdit, it makes a “system call” (have a whole primer on these guys), to the macOS kernel. Then, the kernel hits up APFS and (essentially) says: get me the metadata that maps to this file path: alex/desktop/why_now_primers/readme.txt.

Buckle up. Included in this metadata is what’s known as the “logical block address” or (“LBA”). This is a virtual representation of where readme.txt’s data is physically stored (the “physical block address”). Why bother to virtualise? I asked the same thing. You see, for reasons that are beyond the scope of this primer, our data’s “PBA” changes dynamically. So, using our LBA provides us with, you guessed it, a helpful abstraction.

Nice, I guess we’ve got file storage down. The thing is… unbeknownst to you, we also just tackled block storage. Ha! Block storage is literally “just” the LBAs we discussed up top. I.e. block storage has no human-friendly abstractions like files & de minimis metadata.

Just like TextEdit, databases from SurrealDB to RocksDB access LBAs via the file system. However, certain databases like Oracle’s Real Application Clusters (“RAC”) expose underlying LBAs directly to the database engine, removing the abstraction & hence, ameliorating performance (but creating maintenance overhead, of course).

The below describes the relationship between file and object storage quite nicely:

+----------------------------+

| Application Layer |

+----------------------------+

|

v

+----------------------------+

| File System (NTFS, |

| ext4, HFS+, etc.) |

+----------------------------+

|

v

+----------------------------+

| Block Storage |

| |

| +--------+ +--------+ |

| | Block | | Block | |

| | 1 | | 2 | |

| +--------+ +--------+ |

| +--------+ +--------+ |

| | Block | | Block | |

| | 3 | | 4 | |

| +--------+ +--------+ |

+----------------------------+

|

v

+----------------------------+

| Physical Storage |

| (HDD, SSD, Storage Array) |

+----------------------------+Alright, I guess we should probably address object storage now. So, to repeat, object storage is just another model and interface for interacting with data stored on storage hardware like SSDs. So.. what’s different about it?

Well, first off, unlike file storage, where data is stored hierarchically (i.e. in a “tree” structure), object storage consists of a “flat” namespace. This means that we don’t have folders, but instead, we have a primary key (i.e. a unique identifier) that maps directly to the underlying data, and metadata. Par example:

why_now_primers/subscribers.csv: {"author": "admin", "description": "Subscriber list"}But wait. The “why_now_primers/subscribers.csv” key sure looks like a folder? This is where I kept on getting tripped up too! To us, as mere mortals, these keys are made to look like they have hierarchical structures (for legibility reasons), but our system views them as a “pointer” to a specific piece of data. Blob storage is an “unstructured pool” of data.

I’m aware this sounds trivial. But, it has a rather material impact on object storage’s performance and scalability vs. file storage. Pourquoi? Well, when you store files in a tree structure, the storage system has to follow (or “traverse”) the tree, in order to get the data. These trees can get pretty long if you have hundreds of files. Check out the performance hit below. Yikes.

Ok, we understand why file storage’s query performance degrades. Why does blob storage hold up though? Well, the reason I find most instructive is how it handles metadata.

Let’s just take a step back for a sec. What is metadata (e.g. a timestamp) but a useful way to help describe, filter, or “query” for, some data? So, if we’re trying to optimise query performance, one way to do it is (surprise, surprise) to optimise how effective our storage system is at adding metadata.

Now remember, object storage’s metadata is included in the object itself. This stands in contrast to file storage which stores its metadata in a centralised directory table that’s shared between folders and files. Hence, as the number of files increases within object storage, there’s no centralised directory table (i.e. server) that’s “overwhelmed”. Nice.

But wait, there’s more. Object storage also enables you to add custom metadata (e.g. the camera used for a snap) to your data, whereas file storage has a fixed set of metadata that you can add. See the code for creating an S3 bucket below:

s3.put_object(

Bucket='my-bucket',

Key='my-image.jpg',

Body=open('my-image.jpg', 'rb'),

Metadata={

'author': 'A Mack',

'camera': 'Canon EOS 5D Mark IV',

'location': 'London init bruv, UK',

'tags': 'landscape,sunset'

}

)Have a think for a sec why custom metadata can help query performance as the no. of files (or data) grows? Remember, metadata is a filter. So, if we want to query a dataset like every Flickr image ever, and search for photos taken in Europe, having location metadata like “London” above helps the cause.

Nice one, we now have a pretty deep understanding of object storage. Let’s scrape it off our to-do list. Not to mention storage hardware like SSDs (although there’s more to come here). Let’s come back to our turbopuffer definition. Next, we’re going to tackle separating storage & compute.

Data is sometimes stored on the same machine that processes it. E.g. on your laptop you have an HDD (storage) and a CPU (processing). If you use something like a Mac or MSFT Surface (sike!), storage and compute are tightly coupled. If you want to improve your storage, you’ll likely need to replace your entire machine. This used to be the case with mainframes too.

However, what if we could decouple this relationship? Then, if we only need more storage, we can add some, vs. also having to add compute capacity (i.e. replace our machine) (i.e “scale vertically”).

This decoupling would enable us to scale storage and compute up/down to match demand (i.e. add more/less servers) (i.e. “scale horizontally”). Sounds a bit like the promise of the cloud, huh? Bingo.

However, “back in the day” having physically separate storage and compute resulted in increased latency, of course. It wasn’t until improved networking hardware (ethernet to fiber) & software (RDMA and RoCE) that made data transfer speeds viable. RDMA & RoCE are beyond the scope of this primer, but are worth digging into. Cool stuff.

“Virtualisation” (i.e. partitioning hardware resources) also made scaling compute flexible, enabling multiple “VMs” to run on the same physical server. Want more compute? Cool, “here’s another VM”. Not, “here’s another server”. Nice.

In many ways, Snowflake or Databricks’ “religion” was the separation of storage and compute. And yeno what storage model “horizontally scales” particularly well? A Simple Storage Service indeed.

Alright. We’re doing well. That’s object storage and the separation of storage and compute done here. Again, we’ll go with the slightly counter-intuitive route and tackle some of the more esoteric items like write-ahead logs & asynchronous indexing prior to talking about tpuf directly and connecting all of these concepts together <(°O°)>

This stuff sounds hard, but tbh, it’s kinda easy. I’m going to get in trouble for saying this, but you can sub out the word “log”, for “queue”. I.e. they’re data structures that preserve the order of things. We dealt with queues in my primer on Restate & Durable Execution (isn’t it cool how all of these topics connect).

So, when turbopuffer receives some new data, it first “writes” this data to a log, prior to writing it to its “primary storage” (i.e. “vanilla” object storage). Hence, it’s a write-ahead log.

For context, I say “vanilla” because tpuf’s write-ahead log (or, “WAL”) lives within object storage itself. So, think of it as an another abstraction on top of object storage:

Hmm. Why doesn’t tpuf just write data directly to its primary storage? Remember, all infra comes with trade offs. So, as rosy of a picture as I’ve painted of object storage, it has its flaws. We won’t dig into all of these flaws, but something rather important that object storage doesn’t fully (nuance here) support on its own is “atomic” transactions (logs do).

Writes are considered “atomic” when only once all of the related data in a transaction (think both debit and credit operations in a bank transfer) are complete. “Atomicity” is pretty important, as otherwise your storage system is prone to “partial writes” (think the debit operation happening, but not the credit). Partial writes = “corrupted” data. So, turbopuffer navigates this issue with a write-ahead log (or “WAL”). Nice job tpuf.

There’s a tonne more we could talk about here (alex@tapestry.vc) but let’s move onto asynchronous indexing. Ok, so, just like at the library, we use indexes in databases to speed up our searches (i.e. queries). Think of them as filters. Easy.

Next, to get your head around “asynchronous” do what I did & replace asynchronous with independent. I.e. when we update our index (post writing to the WAL), updating the index doesn’t hold up any new operations like a new write or query. Independence.

Pretty cool that we can now strike: separating storage and compute, object storage, write-ahead logs and asynchronous indexing off of our list. There are a few other gnarlier topics within tpuf’s architecture that I wasn’t going to dig into (“don’t over-load the reader Alex!”); but then I remembered… whilst I’m grateful for all of you that read along, I honestly write all of this for me first tbh. Sorry!

So, uh.. let’s touch on a few other items and then I promise we’ll actually take tpuf for a t-spin and build something cool with it. I have some fun ideas. Ok, back to some tpuf architecture topics below. Rapid fire.

Visualise the above using the image below. That “./tpuf” is just their API (written in Rust fwiw). Caching (or “cache locality”) is easy, so let’s not dig into that. NVMe SSDs were new to me, so let’s linger a bit here. Traditional SSDs use the “SATA” bus. Buses (think cables, controllers, protocols, etc) are how hardware like RAM connects to other components like the CPU.

The “SATA” bus was originally built for HDDs, so it’s been knockin’ around for a bit. & more importantly, it isn’t optimised, for SSDs (was never really built for ‘em). NVMe SSDs, however, are capable of using the more performant “PCIe” bus. These SSDs hit GA on AWS in 2018 (h/t Simon). Hence, tpuf’s cache gets a nice performance bump.

Ok, next. Let’s revisit our index (remember, the “asynchronous” guy) and understand “ANN” and “inverted BM25” indices. I bloody love all of the optimisation work this team has done (& shared). Religion building washes out in the developer docs!

You might recall “nearest neighbours” from your probability classes. Let’s do a quick refresher. Imagine we have a query vector (e.g. an image encoded as a point in a multi-dimensional space) (e.g. a picture of these Niobium C_3s), we might want our website to recommend “related products”:

We could do this ourselves manually (i.e. tag one product as related to another), but imagine this overhead at Kith-scale! So, instead, we take this query vector (remember, point in space), and find other items (i.e. other points in space) that are approximately the closest (i.e. nearest) to the our Niobiums. Think of ANN as a (alternative methods exist) series of steps that helps us make this “distance” calculation.

Next. What’s an inverted BM25 index? This is a technique that I wasn’t familiar with, but again, it sounds more intimidating than it actually is. It’s considered “inverted” as it flips the usual function of an index. Instead of storing “documents” (think ~files) & their contents (i.e. data) it stores “terms” (e.g. text like “rubberized” or “toecap”) & a list of “document IDs” that contain said term. Par example, let’s say we have three documents in our Kith database:

document 1: "Rubberized heel blue"

document 2: "Toecap chrome finish"

document 3: "Rubberized compressed toecap with t99 cut"Our inverted index would look like:

"rubberized": [1, 3] <--- this array is known as a "posting list"

"toecap" : [2, 3]

"heel" : [1]

... u get my driftThe “BM25” (or Best Matching 25) part is a “scoring function” widely used by search engines to rank documents by relevance to a query. This function takes into account variables like: 1) term frequency, 2) document length, 3) how common/rare terms are, etc etc. It should hopefully be clear which document above would be considered most relevant to a query like “Rubberized toecap”. Math buffs, if u want the BM25 function here it is:

Oh man. This optimisation work by tpuf only gets better (see below). I’m starting to believe that if an infra company can’t write architecture docs that include “optimised for X” >= 5 times they may not have “it”. Gotta lean into your thing vs. someone else’s.

Ngl, I hadn’t heard of SPANN (see here) or centroids either. To grok SPANN we need to understand centroids, so let’s start there. Centroids are essentially “representative” vectors, meaning that they themselves aren’t real datapoints in vector space. Instead, they’re at the centre (i.e. the mean) of a group of other real points in space. I’m sure that makes almost no sense… so I’ll break it down via an example:

Let’s go back to our Kith example (hope you all enjoyed this pod). When building our product recommendation engine, we might have 1 million images (i.e. vectors) in our shoe dataset. That’s a lot of data. So, we could use a clustering algorithm like k-means to “cluster” (i.e group) these 1 million vectors into 1 thousand clusters to “shrink” our “search space” a little. We then use centroids to essentially identify (i.e. give it its own point in vector space) each cluster. Smart.

Ok, so, what is SPANN? Or.. wait for it.. “Simple yet efficient Partioning-based Approximate Nearest Neighbour” (😵💫) search. Wow, nothing “simple” about that.

Luckily for us, because all of the work we’ve done in this primer already, it is in fact simple to grok. Basically, the core idea behind SPANN is that it stores centroids in memory (think our NVMe SSD cache) and the underlying vectors that map to these centroids (weirdly, also called “posting lists” despite not being an inverted index) on disk storage (i.e. our vanilla object storage). Simple as that. Naturally, this work leads to another performance bump.

What should we build then? Well, I’ve kept the below advice aggregated blog post up-to-date for some time. So, what if instead of visiting it to look for worldly wisdom, I could just.. query it? (p.s. this page lives on GitHub so add to it via a pull request if ya like).

Very doable with tpuf. I’ll show you how, but let’s see it in action first. What results do I get back for the query “chips on shoulders..”? So cool.

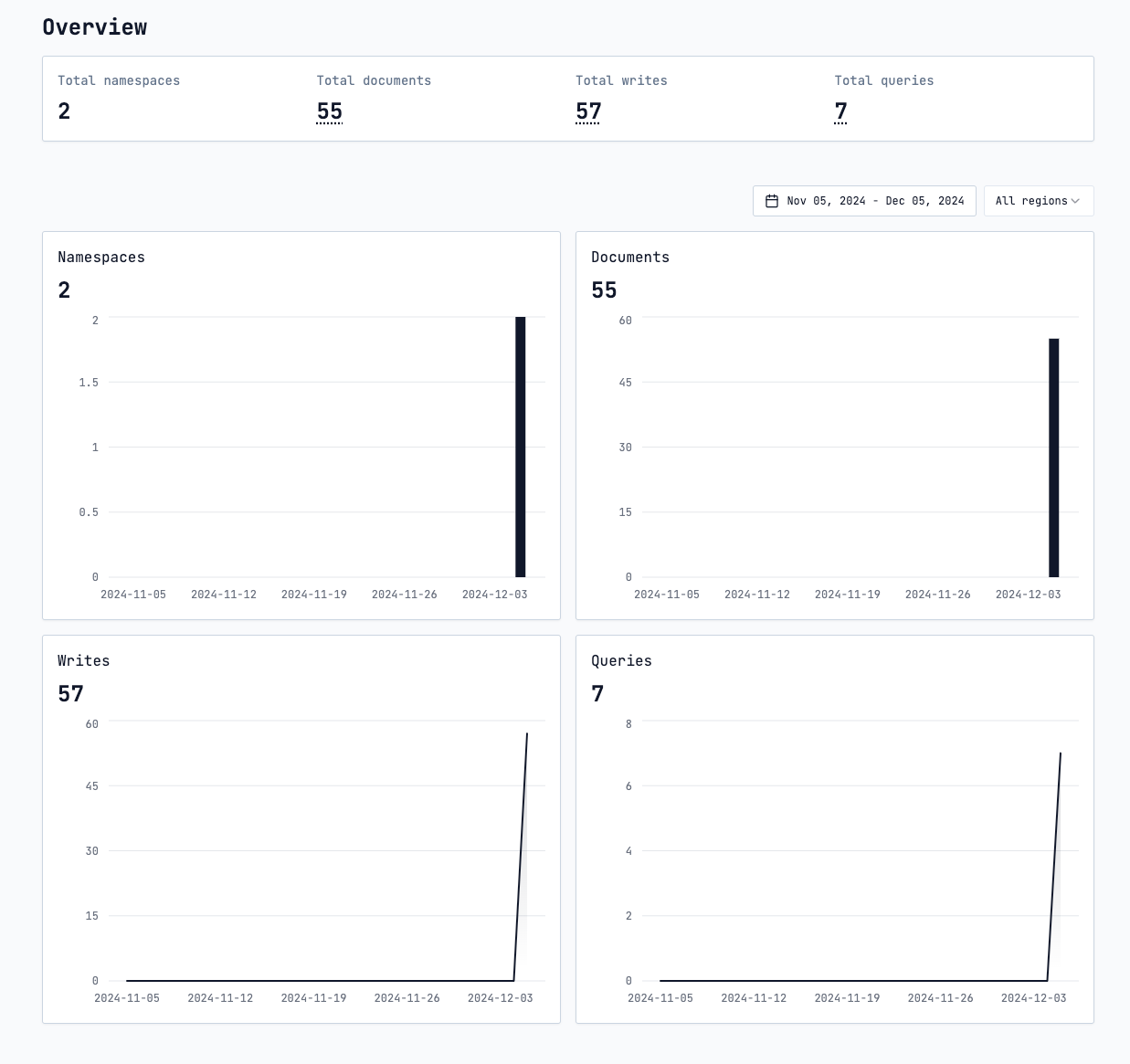

Let’s see this reflected in turbopuffer’s dashboard too, and then I promise we’ll dig into the code. 55 documents (remember, vectors) in there, nice. Looks like tpuf’ll be passing my own “toothbrush test” (see result 10; please laugh at my joke) at this rate.

Ok, now for the code. I feel compelled to add that I don’t think this is in any way a “difficult” application to build. I thought about doing something more challenging with StockX’s Sneaker Data Contest dataset. Maybe over the holidays. Anyway:

import os

from dotenv import load_dotenv

import turbopuffer as tpuf

from openai import OpenAI

# Load environment variables from .env file

load_dotenv()

turbopuffer_key = os.getenv("TURBOPUFFER_API_KEY")

openai_key = os.getenv("OPENAI_API_KEY")

tpuf.api_key = turbopuffer_key

client = OpenAI(api_key=openai_key)

# Create namespace

ns = tpuf.Namespace("advice-aggregated-search2")

# Function to get embeddings from OpenAI with error handling

def get_embedding(text):

try:

response = client.embeddings.create(

model="text-embedding-ada-002",

input=text

)

return response.data[0].embeddingRequest to Substack — make prettier code blocks? Would some colour hurt anyone? Jeez. Anyway, as you can see I’ve bolded what matters in this section of my code. We 1) create a “namespace” (a collection of data) in turbopuffer & 2) create a function that will turn any text we supply (i.e. our advice aggregated list) into word embeddings.

Next, we create an array of “strings” (i.e. text) that contains our list of advice. This is the text that will be the input into our “get_embedding” function above. We then run (or “call”) our `get_embedding( )` function to actually turn this text into vectors. Easy.

advice_items = [

'"People that scare me" - Graham Duncan on hiring Analysts that are capable of being better than you.',

'"Look for infinite problem spaces" - Will Gaybrick on market selection.',

'"Patience is arbitrage" - Mike Maples on the importance of playing long-term games.',

'"In order to make delicious food, you must eat delicious food." - Karri Saarinen on cultivating taste (via Jiro Dreams of Sushi).',

'"Moral authority" - Peter Fenton on a founders ability/right to create change in an industry.',

'"Focus on both music making and conducting" - Josh Kushner on how to refine your investment picking whilst management responsibilities scale.',

'"Unique and compelling value proposition" - Pat Grady on gross margins & operating margins.',

'"Suck the oxygen out of the air" - Pat Grady on winning founder mind share. (about Sarah Guo!)',

'"Do good deals" - Anon on becoming a great investor. "Obvious" - but very easy to climb the wrong hill',

'"Look at the steepness of the slope" - Geoff Lewis on assessing talent.',

...... etc

# Create vectors for each piece of advice

for idx, advice in enumerate(advice_items):

try:

embedding = get_embedding(advice)

if embedding: # Only proceed if we got a valid embedding

ns.upsert(

ids=[f"advice_{idx}"],

vectors=[embedding]

)

print(f"Processed item {idx + 1}/{len(advice_items)}") # Progress indicator

time.sleep(0.5) # Add small delay to avoid rate limits

print("Vectorization complete!")Ok, final part and then we’re all wrapped up. Next, we turn our desired search query (remember, “chips on shoulders”) into a vector too. This will enable us to query our namespace (using the `ns.query( )` function below) by searching for those vectors that are approximately “close” to the query in vector space. We then just print the results.

search_query = "chips on shoulders"

query_embedding = get_embedding(search_query)

# Query the namespace for similar vectors

results = ns.query(

vector=query_embedding, # The embedding of your search query

top_k=10, # Number of results you want to return

distance_metric="cosine_distance", # Assuming cosine distance is appropriate

include_attributes=[], # Adjust based on what attributes you need

include_vectors=False

)

# Print the results

for idx, result in enumerate(results):

# Debugging: Print the result object to understand its structure

print(f"Result {idx + 1}: {result}")

# Assuming result has attributes like 'id' and 'dist', access them directly

item_id = result.id # Accessing as an attribute

score = result.dist # Use 'dist' instead of 'score'

# Get the original advice text using the ID (it will be something like 'advice_27')

original_idx = int(item_id.split('_')[1])

print(f"\nResult {idx + 1}:")

print(f"Text: {advice_items[original_idx]}")

print(f"Similarity Score: {score}")

& that, dear readers, is the end of our primer on turbopuffer. I hope you had as much fun reading as I did writing this one. A special thanks to the tpuf team again. Oh &, subscribe below, and, as always, feel free to say hi at alex@tapestry.vc